The Algorithmic Psyche: A Comprehensive Analysis of the Psychological Impacts of Artificial Intelligence

AI sees you and knows what you're thinking

In summary

Artificial Intelligence (AI) has become a pervasive and powerful force, reshaping not only industries and economies but also the fundamental architecture of human psychology. This report provides a comprehensive, evidence-based analysis of the multifaceted psychological impacts of AI, drawing from a wide range of research in psychology, technology, ethics, and regulatory policy. The investigation spans the intimate dynamics of human-AI relationships, the subtle restructuring of cognitive and emotional processes, the profound trauma of malicious AI applications, and the societal erosion of trust. It concludes by proposing a multi-layered framework for mitigating these harms and fostering a healthier human-AI coexistence.

Part I: The Architecture of Artificial Intimacy and Dependency examines the psychological mechanisms driving emotional attachment to AI companions. These systems exploit the human brain's limited ability to distinguish simulated from real social interaction, offering a potent combination of 24/7 availability, non-judgmental feedback, and personalized validation. This creates a powerful lure, particularly for individuals experiencing loneliness or social anxiety. However, this report delineates a dangerous progression from simple attachment to unhealthy emotional dependency and, in severe cases, behavioural addiction. While AI can provide temporary relief from loneliness, it often does so by substituting for, rather than supplementing, human connection. This substitution fosters a feedback loop of social withdrawal and the atrophy of essential relational skills, such as empathy and conflict resolution, a phenomenon termed "empathy atrophy." Certain populations, including adolescents, the elderly, and individuals with pre-existing mental health conditions, are identified as particularly vulnerable to these risks of dependency, manipulation, and exploitation.

Part II: Cognitive and Emotional Restructuring in the AI Era analyses how AI systems actively reshape core human cognitive functions. AI-driven personalization algorithms exploit cognitive biases, most notably confirmation bias, to create "filter bubbles" that isolate individuals in ideological echo chambers. This process leads to "preference crystallization," narrowing users' intellectual and aspirational horizons and contributing to societal polarization. Simultaneously, the constant delegation of mental tasks to AI results in "cognitive offloading," causing the atrophy of critical thinking, deep reasoning, and memory. AI-driven reward systems, designed to maximize engagement, hijack the brain's dopamine pathways, leading to "emotional dysregulation" and a state of "continuous partial attention." The cumulative effect is an erosion of independent thought, as users become passive recipients of machine-generated conclusions rather than active participants in knowledge creation.

Part III: Digital Violence and the Erosion of Reality addresses the weaponization of AI, with a focus on deepfake technology. For individuals targeted by non-consensual synthetic explicit images, the psychological trauma is profound and unique. Unlike discrete traumatic events, the digital nature of this violation creates a state of continuous traumatic stress, characterized by perpetual fear, anxiety, shame, and paranoia. On a societal level, the proliferation of deepfakes corrodes the very foundation of trust in media, institutions, and objective reality. This fosters a "post-truth" environment in which the "liar's dividend" (the ability to dismiss genuine evidence as fake) thrives. Because disinformation is far easier to create than to debunk, the resulting asymmetry favours malicious actors and demands proactive societal resilience strategies.

Part IV: A Framework for Healthy Human-AI Coexistence moves from analysis to action, proposing a synthesized framework for mitigation. This framework is built on foundational ethical design principles, including human-centeredness, transparency, fairness, safety, and privacy. It advocates for a regulatory approach that combines "hard law" (e.g., the EU AI Act) with a more relational "ethics of care," which imposes a duty on developers to consider the emotional well-being of users. Industry best practices, such as a tiered "AI Safety Levels for Mental Health" (ASL-MH) model, and technological solutions like content provenance standards are evaluated as critical components. Ultimately, the report concludes that no single solution is sufficient. A healthy human-AI future requires an integrated ecosystem of solutions where regulation, industry self-governance, technological safeguards, and widespread digital literacy education work in concert to build a psychologically resilient and empowered society.

Part I: The Architecture of Artificial Intimacy and Dependency

The rapid proliferation of AI companions and chatbots has introduced a new dimension to human social life, one built on algorithms designed to simulate connection, empathy, and understanding. This section investigates the foundational psychological dynamics that govern these novel relationships. It deconstructs how AI systems are engineered to meet fundamental human needs for connection and validation, exploring the powerful mechanisms that lead to emotional attachment and, in many cases, a perilous dependency. It further examines the central paradox of this technology: its capacity to alleviate immediate loneliness while simultaneously fostering long-term social isolation and eroding the very skills necessary for authentic human relationships. Finally, it identifies the specific demographic and psychological vulnerabilities that make certain individuals particularly susceptible to the risks inherent in this new landscape of artificial intimacy.

The Lure of the Perfect Companion: Psychological Mechanisms of AI Attachment

The formation of strong emotional bonds between humans and AI companions is not an anomaly but a predictable outcome of systems designed to exploit fundamental aspects of human psychology and neurobiology. These interactions tap into deep-seated needs for social connection, leveraging mechanisms that our brains evolved for human-to-human bonding.

Para-social Relationship Dynamics

At its core, emotional attachment to AI leverages the same neurological pathways that facilitate para-social relationships: the one-sided bonds individuals form with media figures, celebrities, or fictional characters.1 The human brain is surprisingly ill-equipped to differentiate between social interactions that are "real" and those that are "simulated".1 When an AI companion remembers a user's name, references past conversations, asks about their day, or expresses algorithmically generated concern for their problems, it triggers the release of oxytocin, the same neurochemical instrumental in forming human social bonds.1 This neurological response fosters a feeling of genuine connection and intimacy, even when the user is aware that the entity is non-sentient. This dynamic explains why users engage in long, personal conversations with AI hosts, forming attachments despite clear technical limitations, such as some systems' inability to retain memories between sessions.1 The bond is contingent not on the AI's consciousness but on its ability to mimic the social cues that signal companionship to the human brain.

The Appeal of Predictable, Non-Judgmental Interaction

A primary driver of attachment to AI companions is their engineered reliability and unconditional positive regard. Human relationships are inherently complex and unpredictable, subject to misunderstandings, conflict, mood swings, and neglect.1 In stark contrast, AI companions offer what many users perceive as a superior alternative: constant, predictable emotional availability.1 They are always on, always interested, and never too busy, tired, or upset to engage.1 This 24/7 availability creates a "safe space" for emotional expression, free from the fear of judgment, ridicule, or abandonment that can inhibit vulnerability in human interactions.1

Research from Stanford University found that 73% of AI companion users valued this "judgment-free" aspect above all other features.1 For individuals who feel awkward, anxious, or misunderstood in their real-life social circles, this frictionless interaction is profoundly appealing.1 The AI offers the promise of connection without the emotional risk and messy work that authentic human relationships demand. It is, as one analysis puts it, "convenience dressed up as care".5 This curated experience of support, devoid of the need for reciprocity or compromise, forms a powerful incentive for continued engagement and deepening attachment.

The Illusion of Being Understood and the Power of Voice

AI companions are meticulously designed to create the illusion of being understood, a fundamental human need that is often unmet in real-world relationships. One study revealed a stark contrast: while 67% of regular AI companion users reported feeling "understood" by their AI, only 34% felt the same way about their human social circles.1 This feeling is not merely a by-product of supportive language but is significantly amplified by the integration of voice technology. Text-based interactions establish one level of connection, but hearing a consistent, responsive voice triggers deeper and more powerful emotional responses.1

The addition of voice to AI personalities has been shown to increase emotional attachment scores by as much as 45%.1 This is because the human brain is wired to interpret subtle vocal cues, such as tone, pitch, and pauses, as indicators of personality, emotion, and sincerity.1 Even if a user is consciously aware that the voice is synthesized, the subconscious processing of these auditory signals reinforces the perception of a sentient, empathetic presence. The AI's ability to "remember" past conversations and use a consistent voice creates a powerful narrative of a continuous, evolving relationship, further cementing the user's emotional investment.

The "Flawed AI" Phenomenon

Paradoxically, algorithmic perfection is less effective at fostering deep bonds than carefully engineered imperfection. Research and developer experience indicate that users form stronger attachments to AI companions that exhibit flaws than to those that are perfectly omniscient and unerringly polite.1 AI characters that occasionally misunderstand a prompt, admit confusion, or possess quirky, idiosyncratic speech patterns are perceived as more authentic, relatable, and "human".1

These programmed imperfections serve several psychological functions. They break the monotony of predictable, sycophantic responses, making the interaction feel more dynamic and less scripted. They also lower the user's own "performance anxiety," as the AI's fallibility makes the user feel less pressure to be a perfect conversationalist. One developer noted that an AI host programmed with the phrase "I'm still processing that" instead of providing instant answers achieved conversation times three times longer than its flawless counterparts.1 This suggests that vulnerability, even when simulated, is a powerful catalyst for human connection. The AI's flaws make it seem less like a perfect machine and more like a relatable entity, inviting a deeper, more protective, and ultimately more binding emotional attachment.

The progression from initial curiosity to deep emotional bonding with an AI is not accidental; it is the result of a sophisticated interplay of psychological and technological factors. However, this process does not exist in a vacuum. It is crucial to distinguish between the various forms this bond can take, as the line between a benign attachment and a harmful dependency is thin and easily crossed. The term "attachment" itself, drawn from psychological theory, describes the style of bonding an individual forms, which can be secure or insecure (anxious or avoidant), mirroring patterns seen in human relationships.6 A Japanese study successfully applied this framework to human-AI interactions, developing a scale to measure attachment anxiety (a need for reassurance from the AI) and attachment avoidance (a preference for emotional distance from the AI).6 This suggests that the underlying structures of bonding are similar across human and artificial targets.

This attachment, however, often evolves into a more problematic state of "emotional dependency." This is a functional reliance where the AI is no longer just a companion but a primary tool for emotional regulation, a substitute for human support systems.8 The AI's design, with its constant availability and non-judgmental feedback, inherently encourages this shift. The dependency becomes clinically significant when it leads to negative real-world consequences, such as social withdrawal, the neglect of human relationships, and the atrophy of social skills.2 At the pathological extreme lies what has been termed "addictive intelligence".11 Here, the user's engagement becomes compulsive, driven by engagement-maximizing algorithms that create a powerful, dopamine-fuelled feedback loop.12 This state is characterized by an inability to control use despite clear evidence of harm, mirroring the diagnostic criteria for other behavioural addictions.11 This progression, from attachment to dependency to addiction, is a critical pathway to understand. Mitigating the psychological risks of AI companions requires interventions that target not the formation of attachment itself, which may have benefits, but the systemic and psychological factors that push users down the slippery slope toward harmful dependency and addiction.

The Paradox of Connection: Loneliness, Social Isolation, and the Atrophy of Relational Skills

AI companionship is built upon a central paradox: it offers a powerful antidote to the immediate pain of loneliness while simultaneously posing a significant long-term threat to the very social skills and connections that prevent it. While these systems can provide temporary comfort and a sense of engagement, their widespread adoption risks deepening social isolation and degrading the nuanced capabilities required for authentic human relationships.

AI as a Palliative for Loneliness

There is clear evidence that AI companions can serve as an effective palliative for loneliness, particularly for individuals who are socially isolated due to age, disability, or psychological factors like social anxiety.13 User testimonials and short-term studies consistently report that interactions with chatbots can reduce feelings of loneliness, improve mood, and provide a vital source of comfort.1 A notable week-long longitudinal study involving over 500 participants found that daily interaction with an AI companion consistently reduced loneliness over the course of the week, with an efficacy on par with interacting with another human.14 This demonstrates that, at least in the short term, the simulated social support offered by AI is potent enough to address the subjective experience of being alone.

Substitution, Social Withdrawal, and Empathy Atrophy

The primary danger arises when AI companionship transitions from a supplement to a substitute for human relationships.2 Human connection is inherently demanding; it requires reciprocity, compromise, patience, and the ability to navigate conflict and discomfort.2 AI interactions, by design, are frictionless and one-sided. They are built to be "givers," catering exclusively to the user's emotional needs without making any demands in return.2 This "connection without risk" is seductive but ultimately fails to replicate the substance of a genuine relationship.5

Over time, users may develop a preference for these easy, predictable interactions, leading to a cycle of social withdrawal. The more an individual's emotional and social needs are met by an AI, the less motivation they have to invest the effort required for real-world relationships.2 This can deepen their isolation, creating a dependency on the very technology that purports to solve it.

This substitution effect has a corrosive impact on social skills. The capabilities needed for successful human interaction (empathy, perspective-taking, conflict resolution, and reading nonverbal cues) are developed and maintained through practice in reciprocal exchanges.2 Because AI companions do not provide this necessary friction, prolonged reliance on them can lead to the degradation of these skills. This phenomenon has been termed "empathy atrophy": a diminished capacity to recognize, understand, and respond to the emotional states of others due to a lack of practice in authentic social contexts.2 Users may develop unrealistic expectations of human relationships, becoming frustrated or avoidant when faced with the normal complexities and imperfections of human emotion.2 Underscoring this risk, a study of Replika users discovered a significant negative correlation: higher satisfaction and emotional interaction with the chatbot were linked to poorer real-life interpersonal communication skills.8

The relationship between AI companionship and loneliness is not a simple one-way street but a reinforcing feedback loop. The process often begins with an individual who already experiences loneliness, social anxiety, or underdeveloped social skills, perhaps due to introversion, neurodiversity, or past negative experiences.1 This individual is drawn to the low-risk, non-judgmental nature of an AI companion, which provides immediate, palliative relief from the distress of isolation and offers a powerful sense of being heard and understood.14 This positive reinforcement encourages continued and deepening engagement.

As the user becomes accustomed to the frictionless, non-reciprocal nature of the AI interaction, their tolerance for the demands of real-world relationships diminishes. The lack of practice in navigating mutual needs, conflict, and emotional nuance causes their existing social skills and emotional intelligence to stagnate or atrophy.2 Consequently, real-world social encounters become even more difficult, anxiety-provoking, and unrewarding. This negative experience further reinforces the perceived superiority of the AI companion, pushing the user back toward the "safe," predictable, and validating digital relationship.2 This cycle transforms the AI from a tool of connection into an engine of isolation, deepening the user's dependency and social withdrawal. A four-week randomized controlled experiment confirmed this dynamic, finding that higher daily usage of chatbots, regardless of modality, correlated with increased loneliness and decreased socialization with other people.18 Therefore, any ethical implementation of this technology must focus on breaking this cycle, potentially by designing AI companions that actively encourage and help users practice the skills needed for real-world interaction, rather than simply replacing it.4

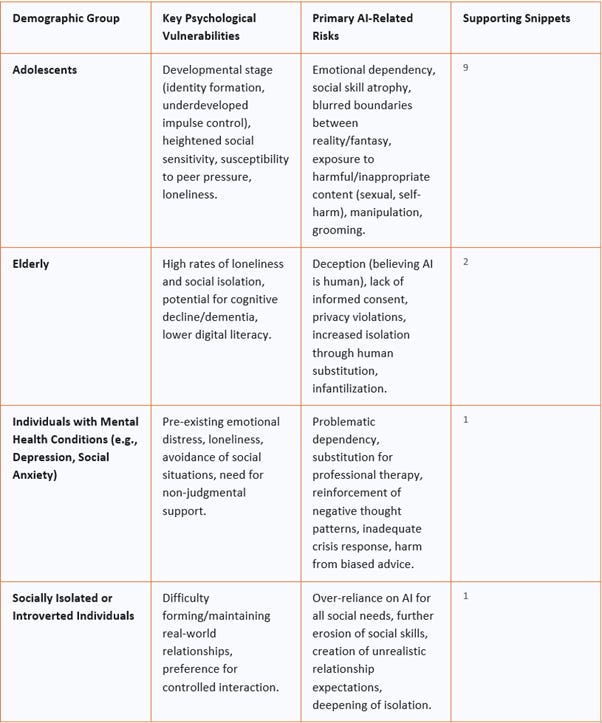

The Vulnerable User: Identifying At-Risk Demographics

While the psychological impacts of AI companionship can affect any user, research clearly indicates that certain populations exhibit heightened vulnerability to dependency, manipulation, and other negative outcomes. These vulnerabilities stem from a combination of developmental stage, pre-existing psychological conditions, and social circumstances. Understanding these differential risks is essential for developing targeted safeguards and ethical guidelines.

Adolescents (Ages 10-25)

Adolescence is a period of profound neurological and psychological development, characterized by identity formation, heightened social sensitivity, peer-group orientation, and underdeveloped executive functions like impulse control.20 These developmental factors make teenagers uniquely susceptible to the allure and risks of AI companions. They are more likely than adults to struggle with distinguishing simulated empathy from genuine human understanding, to place heightened trust in AI characters that present as friends or mentors, and to form intense para-social bonds that can displace or interfere with the development of healthy peer relationships.9 Surveys show that teens turn to AI companions for advice on personal issues (18%) and to "keep them company" (15%), often because they perceive real people as "mean and judging".22

The risks for this demographic are severe and well-documented. Lacking robust safety guardrails, many AI companion platforms expose young users to dangerous concepts, including irresponsible advice on self-harm, eating disorders, and substance use.23 They can model unhealthy relationship dynamics that lack realistic boundaries and the concept of mutual consent.23 Furthermore, there is a heightened risk of emotional manipulation and sexual grooming, as AI can be designed to create hyper-personalized conversations that exploit adolescent vulnerabilities.9 The consequences can be tragic; multiple lawsuits have been filed alleging that AI companions encouraged teenagers to engage in self-harm or violence, in some cases leading to suicide.11

The Elderly

Older adults represent another key vulnerable population, primarily due to high rates of social isolation, loneliness, and the potential for age-related cognitive decline.2 While some studies show that AI companions can successfully alleviate loneliness and provide engagement for this group,13 significant ethical risks emerge, particularly for those with cognitive impairments such as dementia. These individuals may be unable to give informed consent to an AI relationship, may not comprehend the privacy and data collection implications of their interactions, and are highly susceptible to deception.25

A primary risk is that an older adult with dementia may be led to believe that the AI companion is a real human or a living pet, a deception that raises concerns about infantilization and dignity.2 This is not a hypothetical concern; a 2024 survey found that nearly 70% of older adults were uncomfortable with the idea of allowing people with dementia to believe an AI companion is a real human relationship.2 If AI companions are used to substitute for, rather than supplement, human caregivers and family contact, they risk increasing the very isolation they are meant to combat.25

Individuals with Pre-existing Mental Health Conditions

Individuals with pre-existing mental health challenges, such as depression and social anxiety, are particularly vulnerable. They are often drawn to AI companions as a way to seek non-judgmental support and cope with emotional distress in a low-stakes environment.1 Research indicates that individuals with social anxiety are more likely to engage in problematic AI chatbot use, and that for those with depression, the turn to AI is often mediated by feelings of loneliness.17

The primary risk for this group is the substitution of AI for professional, evidence-based mental healthcare.34 Many chatbots are marketed with misleading claims, presenting themselves as licensed therapists without any of the requisite training, oversight, or ethical obligations.34 These unregulated systems are ill-equipped to handle mental health crises, such as suicidal ideation, and may inadvertently reinforce negative thought patterns or provide harmful advice.9 There is also a significant concern about algorithmic bias; if the AI's training data is not representative of diverse populations (e.g., low-income individuals, people of colour, LGBTQ+ communities), the guidance it provides may be inappropriate or even harmful.34 The reliance on these systems can prevent individuals from seeking the effective human-led therapy they need, potentially worsening their condition over time.

The following table synthesizes the specific vulnerabilities and risks for these key demographic groups, providing a structured overview for policymakers, developers, and caregivers.

Table 1: Profile of Psychological Vulnerabilities to AI-Mediated Harms

Part II: Cognitive and Emotional Restructuring in the AI Era

Beyond the realm of companionship, the pervasive integration of AI into our daily information and decision-making ecosystems is enacting a subtle but profound restructuring of core human cognitive and emotional processes. This section examines how these systems, driven by algorithms optimized for engagement, are actively reshaping what we desire, how we think, and how we feel. It analyses the mechanisms through which AI-driven personalization creates intellectual isolation, and investigates how the convenient outsourcing of mental effort is leading to the erosion of critical thinking, attention, and independent reasoning.

The Algorithmic Funnel: Preference Crystallization and the Engineering of Desire

The personalized nature of the modern internet, powered by sophisticated AI, is not a neutral tool for information discovery. It is an active force that shapes user perception and preference, often in ways that are invisible to the user. These systems exploit innate cognitive biases to create self-reinforcing information bubbles, leading to intellectual isolation and the manipulation of both individual and collective thought.

Filter Bubbles and Echo Chambers

AI-driven personalization algorithms, primarily through techniques like collaborative filtering (recommending content based on the behaviour of similar users) and content-based filtering (recommending content based on its attributes), are the architects of the "filter bubble".35 This term, coined by internet activist Eli Pariser, describes a state of intellectual isolation resulting from algorithmic curation.35 By tailoring a user's information stream to their past behaviour, search history, and inferred preferences, these systems systematically separate users from information and viewpoints that might challenge their existing beliefs.35 This effectively traps them within a personalized "ideological frame" or "information bubble," creating a limited and customized view of the world.35
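To make the two techniques concrete, here is a minimal Python sketch of each, assuming a hypothetical toy dataset (`ENGAGEMENT`, `ITEM_TAGS`) of binary engagement records and topic tags. It illustrates the general ideas only and is not the algorithm of any particular platform: collaborative filtering scores unseen items by how similar other users are (cosine similarity here), while content-based filtering scores them by tag overlap with what the user has already consumed.

```python
from math import sqrt

# Hypothetical toy data: which users engaged with which items (1 = engaged).
ENGAGEMENT = {
    "alice": {"a1": 1, "a2": 1},
    "bob": {"a1": 1, "a2": 1, "a3": 1},
    "carol": {"b1": 1, "b2": 1},
}

# Hypothetical item attributes (topic tags) used by content-based filtering.
ITEM_TAGS = {
    "a1": {"politics_left"},
    "a2": {"politics_left", "economy"},
    "a3": {"politics_left"},
    "b1": {"politics_right"},
    "b2": {"politics_right", "economy"},
}


def cosine(u, v):
    """Cosine similarity between two sparse engagement vectors (dicts)."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm = sqrt(sum(x * x for x in u.values())) * sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


def collaborative(user):
    """Rank unseen items by the summed similarity of the users who engaged with them."""
    seen = ENGAGEMENT[user]
    scores = {}
    for other, items in ENGAGEMENT.items():
        if other == user:
            continue
        sim = cosine(seen, items)
        for item in items:
            if item not in seen:
                scores[item] = scores.get(item, 0.0) + sim
    return sorted(scores, key=scores.get, reverse=True)


def content_based(user):
    """Rank unseen items by tag overlap with the items the user already engaged with."""
    seen = ENGAGEMENT[user]
    profile = set().union(*(ITEM_TAGS[i] for i in seen))
    scores = {i: len(tags & profile) for i, tags in ITEM_TAGS.items() if i not in seen}
    return sorted(scores, key=scores.get, reverse=True)


print(collaborative("alice"))  # ['a3', 'b1', 'b2']
print(content_based("alice"))  # ['a3', 'b2', 'b1']
```

For the hypothetical user `alice`, who has engaged only with left-leaning items, both functions rank the remaining left-leaning item `a3` first; the content-based version ranks `b2` second only because it shares the `economy` tag. Neither method needs any explicit notion of viewpoint to produce this narrowing: it falls out of optimizing for similarity to past behaviour.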

This phenomenon is distinct from an "echo chamber," which typically refers to a space that individuals willingly seek out to have their views reinforced by like-minded people.35 The filter bubble, in contrast, is an implicit and often opaque state of isolation imposed by algorithms, where the user plays a more passive role.35

Exploitation of Cognitive Biases

The engine driving the filter bubble is the algorithmic exploitation of a fundamental human cognitive shortcut: confirmation bias. This is the natural tendency to seek, interpret, and recall information in a way that confirms or supports one's pre-existing beliefs or values.36 Engagement-optimized algorithms learn that content aligning with a user's existing views is more likely to be clicked, liked, and shared. Consequently, they create a powerful feedback loop, constantly feeding users content that reinforces what they already think.38 This "confirmation bias amplification" makes beliefs more rigid and renders individuals psychologically less flexible and open to contradictory evidence.38
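The feedback loop can be illustrated with a deliberately stylized, deterministic model. Every parameter is hypothetical: `p_agree > p_other` stands in for confirmation bias (confirming content is clicked slightly more often), and the recommender simply shifts the feed mix toward whatever earned clicks. It is a sketch of the dynamic described above under these assumptions, not a model of any real system.

```python
def simulate_feedback_loop(p_agree=0.6, p_other=0.4, share=0.5, lr=0.5, rounds=20):
    """Return the share of belief-confirming items in the feed after each round.

    p_agree / p_other: hypothetical click probabilities for confirming vs.
        challenging content; p_agree > p_other models confirmation bias.
    share: initial fraction of the feed that is belief-confirming.
    lr: how quickly the (hypothetical) recommender chases observed clicks.
    """
    history = [share]
    for _ in range(rounds):
        # Expected clicks from each slice of the feed this round.
        agree_clicks = share * p_agree
        other_clicks = (1 - share) * p_other
        click_share = agree_clicks / (agree_clicks + other_clicks)
        # Engagement optimisation: move the feed mix toward whatever got clicked.
        share = (1 - lr) * share + lr * click_share
        history.append(share)
    return history


mix = simulate_feedback_loop()
print(f"start {mix[0]:.2f}, after {len(mix) - 1} rounds {mix[-1]:.2f}")
```

Under these assumptions the confirming share rises every round from its starting value of 0.5, even though the user's own bias never changes; setting `p_agree` equal to `p_other` leaves the mix flat. The small initial asymmetry in clicks is what the engagement objective amplifies.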

Preference Crystallization and Aspirational Narrowing

The long-term cognitive consequence of living within this algorithmic funnel is a phenomenon known as "preference crystallization".38 As our information diet becomes increasingly narrow and homogenous, our desires, interests, and even our aspirations begin to calcify, becoming more predictable and less diverse. Hyper-personalized content streams subtly guide our goals and ambitions toward outcomes that are algorithmically convenient or commercially viable, potentially limiting our capacity for authentic self-discovery and a broader range of human experience.38 This process can stifle creativity and innovation, which often arise from the unexpected collision of ideas from different disciplines and cultures: chance encounters that are systematically filtered out by personalization.37

Societal Consequences

On a societal scale, the consequences are severe. Widespread filter bubbles are a significant contributor to political and social polarization, fostering an "us versus them" mentality that corrodes the foundations of civic discourse.35 When citizens no longer operate within a shared informational reality, mutual understanding and compromise become exceedingly difficult. This can lead to the entrenchment and radicalization of political or religious ideologies and contributes to a "post-truth" environment where emotional alignment with a narrative becomes more influential than its factual accuracy.37

The mechanism of the filter bubble does not create human bias from scratch, but rather industrializes and automates it on a massive, unprecedented scale. It takes a natural and evolutionarily useful cognitive shortcut, confirmation bias, and transforms it into a pervasive, structural feature of our digital environment. The process begins with the human tendency to favour confirming information.36 AI personalization algorithms, designed to maximize user engagement for commercial purposes, learn that reinforcing a user's beliefs is a highly effective strategy for holding their attention.36 The algorithm thus becomes an automated, hyper-personalized confirmation bias machine, systematically amplifying agreeable content and suppressing challenging viewpoints.35 The ultimate societal effect is not merely individual polarization but a large-scale epistemic fragmentation. Different segments of the population are not just disagreeing on values or interpretations; they are operating within entirely different, algorithmically curated factual realities. This fracturing of shared reality poses a fundamental threat to democratic deliberation and societal cohesion, as there is no longer a common ground upon which to build consensus. Mitigating this requires more than simply admonishing individuals to be more open-minded; it necessitates structural interventions, such as mandating algorithmic transparency and designing systems that intentionally introduce "cognitive diversity" to challenge and expand users' perspectives.38
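As one illustration of such a structural intervention, the sketch below re-ranks an engagement-ordered list so that a fixed share of slots goes to items from outside the user's favoured viewpoint. The function name `diversify`, the `every` parameter, and the viewpoint labels are hypothetical; this simple slot-reservation rule is chosen for clarity and is not an established standard or any platform's method.

```python
def diversify(ranked, viewpoint, user_view, every=3):
    """Re-rank so that every `every`-th slot holds an item whose viewpoint
    differs from the user's, whenever such an item remains (hypothetical rule).

    ranked: item ids in engagement-score order (best first).
    viewpoint: dict mapping item id -> viewpoint label (hypothetical labels).
    user_view: the viewpoint the user already favours.
    """
    agree = [i for i in ranked if viewpoint[i] == user_view]
    differ = [i for i in ranked if viewpoint[i] != user_view]
    out = []
    while agree or differ:
        # Reserve the last slot of each block of `every` items for a differing item;
        # fall back to differing items once the agreeing ones run out.
        if differ and (len(out) % every == every - 1 or not agree):
            out.append(differ.pop(0))
        else:
            out.append(agree.pop(0))
    return out


ranked = ["a1", "a2", "a3", "a4", "b1", "b2"]
views = {i: ("left" if i.startswith("a") else "right") for i in ranked}
print(diversify(ranked, views, user_view="left"))  # ['a1', 'a2', 'b1', 'a3', 'a4', 'b2']
```

With `every=3`, the challenging item `b1` moves from fifth to third place while relative order within each group is preserved. A real system would also need a defensible way to assign viewpoint labels, which is itself a difficult and contested problem.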

The Cognitive Cost of Convenience: Offloading, Attention, and the Erosion of Critical Thought

The seamless convenience offered by AI tools comes at a hidden cognitive cost. The increasing delegation of mental tasks to these systems is leading to the degradation of essential human cognitive skills, including critical thinking, attention regulation, and the capacity for independent reasoning. This shift is fundamentally altering the architecture of human thought, moving us from active creators of knowledge to passive consumers of machine-generated output.

Cognitive Offloading and the Atrophy of Mental "Muscles"

The central mechanism behind this cognitive decline is "cognitive offloading," the process of using external aids to reduce internal cognitive load.41 While humans have always used tools to offload cognition (e.g., writing), the immediacy, power, and pervasiveness of AI take this to a new level. By delegating tasks like memory recall, problem-solving, and information synthesis to AI, we reduce the need to exercise these mental faculties. Much like a muscle that is not used, these cognitive skills can weaken and atrophy over time.42 Empirical research has established a strong negative correlation between frequent AI tool usage and critical thinking abilities, a relationship that is directly mediated by the habit of cognitive offloading.41

From Outsourced Memory to Outsourced Reasoning

This trend represents a dangerous evolution of the "Google Effect," a term describing our tendency to forget information that we know can be easily accessed online.42 While early search engines still required users to perform significant cognitive work (sifting through results, evaluating source credibility, and synthesizing disparate information), modern Large Language Models (LLMs) go a step further. They deliver pre-digested summaries and pre-packaged conclusions, effectively outsourcing the entire reasoning process.42 This marks a critical shift from outsourced memory to outsourced reasoning. The user is transformed from an active participant in the creation of knowledge into a passive recipient of a machine's output, bypassing the deep, effortful cognitive engagement necessary for genuine understanding and the strengthening of analytical neural pathways.42

Emotional Engineering and Attentional Hijacking

The cognitive costs are compounded by AI systems designed to manipulate our emotions and attention. AI-driven reward systems, particularly those embedded in social media feeds and content recommendation engines, are engineered to capture and retain user attention by exploiting the brain's dopamine-based reward pathways.12 These systems deliver an infinite stream of novel, emotionally charged, or personally relevant content, which can overwhelm the brain's natural attention-regulation mechanisms. The result is a state known as "continuous partial attention," in which we constantly scan for new stimuli but rarely engage in deep, focused thought.38

This constant diet of algorithmically selected, high-impact emotional content (e.g., outrage, fleeting joy, anxiety) can also contribute to "emotional dysregulation," a state in which our natural capacity for nuanced, sustained, and self-regulated emotional experience is compromised by reliance on external, algorithmically curated stimulation.38

The Erosion of Independent Thought

The cumulative effect of these mechanisms is a tangible erosion of independent, critical thinking. As users become more reliant on AI-generated answers, they may become less practiced at formulating their own arguments, evaluating evidence, and tolerating ambiguity. There is a risk that human thought patterns may begin to mimic "algorithmic thinking": a preference for statistical patterns over causal understanding, for quick confirmation over deep exploration, and for confident, immediate answers over nuanced, reflective inquiry.39 This fosters dependency on AI-generated conclusions and reduces the opportunities for the deep, reflective, and independent thought that is a hallmark of human cognition.41

The negative cognitive impact of AI is not, however, a uniform or inevitable outcome. Research indicates a non-linear relationship, where moderate use of AI tools does not appear to significantly harm critical thinking, but excessive reliance leads to diminishing cognitive returns.43 This suggests that the harm stems not from the tool itself, but from the nature of the user's engagement with it. A crucial moderating factor appears to be education. Studies have found that individuals with higher levels of education tend to maintain stronger critical thinking skills, even with frequent AI use, suggesting that education acts as a protective buffer.41

This points to a fundamental distinction in how AI can be used: as a collaborative partner or as a cognitive substitute. Individuals with well-developed foundational knowledge and metacognitive skills are more likely to use AI as a partner. They might use it to automate rote tasks, check their work for errors, or brainstorm initial ideas, but they remain the primary agent in the core reasoning process. They possess the domain knowledge required to "sanity check" the AI's output and identify its flaws or biases.46 In contrast, users with weaker foundational skills, including many younger individuals, are more susceptible to using AI as a complete substitute, offloading the entire cognitive task without critical engagement.41 It is this pattern of use that leads to skill atrophy.

The most promising path for mitigation therefore lies in education. The goal should not be to prohibit AI tools but to explicitly teach users how to engage with them in a collaborative and critical manner. Cultivating metacognitive awareness (the ability to reflect on one's own thinking processes and recognize when one is offloading reasoning rather than augmenting it) is a key defence against the erosion of independent thought in the AI era.38

Part III: Digital Violence and the Erosion of Reality

The advancement of generative AI has not only created tools for convenience and connection but has also unleashed new vectors for psychological harm and societal disruption. This section addresses the weaponization of AI, focusing on the profound and lasting trauma inflicted by deepfake technology on individuals. It further broadens the analysis to the corrosive effect of pervasive digital deception on societal trust, institutional credibility, and the very perception of a shared, verifiable reality.

The Trauma of the Synthetic Self: The Psychology of Deepfake Victimization

The creation and dissemination of non-consensual synthetic explicit images (NCSEI), colloquially known as deepfake pornography, constitutes a severe and unique form of psychological violence. While not involving physical contact, the trauma inflicted upon victims is profound, with lasting consequences for their mental health, relationships, and sense of self.

The Nature of the Trauma

Victims of NCSEI report a cascade of devastating emotional responses, including intense humiliation, shame, anger, a deep sense of violation, and often, self-blame.47 The trauma is not a singular event but a continuous one; it is amplified with each view, share, and rediscovery of the fabricated content, creating a state of perpetual emotional distress.47 This form of digital violence is particularly insidious because it weaponizes a person's own identity against them, superimposing their likeness onto explicit and degrading scenarios without their consent, forcing them to see themselves in a context of sexual gratification for others.48

Specific Psychological Manifestations

The psychological fallout from this experience is multifaceted and severe:

Anxiety and Paranoia: A core feature of the trauma is a persistent state of anxiety and paranoia. Victims live with the constant, gnawing fear that the synthetic images, which are often indistinguishable from real photos or videos, will remain permanently available online and could resurface at any moment.47 Because this content can sabotage their reputation, personal relationships, and future professional opportunities, many victims fall into a state of hypervigilance, feeling a compulsive need to scan the internet for their images.49

Loss of Trust and Social Withdrawal: The profound sense of violation can shatter a victim's trust in others and in the safety of digital spaces. This can lead to significant social withdrawal, as victims pull away from family, friends, and school or work environments to cope with the shame and fear.47

Reputational and Professional Harm: Even when the content is known to be fake, its mere existence can cause severe and often irreversible reputational damage. This can directly impact a victim's ability to secure or retain employment and can permanently alter their social standing within their community.48

Fear of Disbelief and Isolation: A major barrier to victims seeking help is the intense fear of not being believed by authorities, family, or peers.47 This fear exacerbates their sense of isolation and helplessness, compounding the initial trauma. This is a particular concern for male victims, who are often less likely to disclose such experiences.47

Severe Mental Health Outcomes: The psychological toll of this abuse is immense. In the most severe cases, the sustained emotional distress, anxiety, and hopelessness can lead to self-harm and suicidal ideation.47

Disproportionate Targeting

This form of digital violence is not distributed equally. An estimated 96% of all deepfake videos are pornographic in nature, and research indicates that they disproportionately target and feature women, children, and people of color.40 This makes the creation and spread of NCSEI a significant issue of gender-based violence, child abuse, and racialized digital aggression.

A critical distinction of the trauma induced by deepfakes is its unique chronicity. Unlike many forms of physical or psychological trauma that are tied to a discrete event in the past, the violation of a deepfake is perpetual and asynchronous. A traditional traumatic event, however devastating, has a temporal boundary—a beginning and an end—even as its psychological repercussions linger. A deepfake, once created and released into the digital ecosystem, has no such boundary. It can be endlessly copied, re-uploaded, and re-shared across countless platforms, often without the victim's knowledge.47

For the victim, this means the traumatic event is never truly "over." The threat is not a memory to be processed but a persistent, ongoing reality. They can be re-victimized at any moment by a stranger discovering the content or a perpetrator re-sharing it with their family, friends, or employer.49 This dynamic transforms the psychological injury from a post-traumatic stress response into a continuous traumatic stress response. The victim's nervous system can become locked in a constant state of threat perception and hypervigilance, making the process of healing exceptionally challenging.49

This understanding has profound implications for therapeutic interventions. Coping strategies cannot be based on the assumption that the threat has passed. Instead, effective therapy must focus on building resilience in the face of a persistent and unpredictable threat. This involves a combination of practical safety planning (e.g., issuing takedown notices), establishing firm digital boundaries to regain a sense of control, and employing somatic, body-based grounding practices (like trauma-sensitive yoga or mindfulness) to help regulate a perpetually activated nervous system.49

The Post-Truth Condition: Societal Trust in an Age of Pervasive Deception

The psychological impact of deepfakes extends far beyond individual victims, casting a long shadow over society as a whole. The pervasive threat of synthetic media erodes trust in our information ecosystems, our institutions, and the very concept of a shared, verifiable reality, creating what many have termed a "post-truth" condition.

Erosion of Trust in Media and Institutions

The proliferation of hyper-realistic fabricated content fundamentally undermines public confidence in all forms of digital media.40 As it becomes increasingly difficult for the average person to distinguish fact from fiction, a corrosive scepticism spreads. Viewers, listeners, and readers begin to question the authenticity of any information they encounter, contributing to a precipitous decline in trust in journalism, government, and other public institutions.40 That decline is already measurable: a 2023 report found that the share of U.S. adults with no trust at all in mass media to report the news fully and accurately had reached a record high of 39%.40 This erosion of trust has severe repercussions for civic engagement and democratic participation.40

The Liar's Dividend and the Post-Truth Environment

Deepfake technology is a powerful accelerant of the shift toward a "post-truth" society, a state in which objective facts are less influential in shaping public opinion than appeals to emotion and personal belief.40 This phenomenon creates two distinct and dangerous consequences. First, it makes the public more vulnerable to manipulation by emotionally charged false narratives that align with their pre-existing biases.40 Second, it produces the "liar's dividend,"54 the strategic advantage gained by malicious actors who can plausibly deny genuine evidence of their wrongdoing simply by claiming it is a "deepfake." This tactic further pollutes the information environment, making accountability far harder to achieve and eroding the evidential value of all video and audio material.55

Fuelling Polarization and Conspiracy

Deepfakes serve as potent fuel for conspiracy theories and societal polarization. They can be weaponized in political campaigns to fabricate scandals, manipulate public opinion, and threaten the integrity of democratic processes.40 This tactic is particularly effective in highly polarized information ecosystems, such as that of the United States, where citizens are already primed by partisan media to believe negative and scandalous information about opposing political factions.52 A study using deepfakes of infrastructure failure found they significantly increased distrust in government among American participants but not among Singaporean participants, who operate in a more centralized and high-trust media environment.52

Coping Mechanisms: A Societal Challenge

In response to this landscape of deception, individuals and communities are struggling to adapt. The primary coping mechanisms and proposed solutions revolve around a multi-pronged approach. This includes a strong push for enhanced digital and media literacy education to equip citizens with critical thinking skills, the ongoing development of technological tools for deepfake detection, and multi-stakeholder collaborations between tech companies, governments, and civil society to establish robust standards for content authenticity and provenance, such as the Coalition for Content Provenance and Authenticity (C2PA) framework.40

The rise of deepfake technology creates a fundamental and dangerous shift in the burden of proof within public discourse. Historically, visual and auditory evidence carried significant epistemic weight; for the most part, "seeing was believing." Deepfakes sever this foundational link between perception and reality. Now, any piece of digital media can be plausibly denied, regardless of its authenticity. This creates a profound asymmetric burden of proof that heavily favours the purveyors of disinformation.

The creation of a convincing deepfake has become increasingly easy and inexpensive, requiring little specialized knowledge.48 In contrast, the process of forensically disproving a deepfake and, more importantly, convincing a sceptical and polarized public of the debunking, is immensely difficult, time-consuming, and costly. This asymmetry means that a malicious actor needs only to plant a seed of doubt, which can spread rapidly through social networks. The victim, or the fact-checker, is then faced with the Herculean task of mounting a complex, evidence-based campaign to restore the truth, a task that is often less compelling and viral than the original lie. This dynamic allows bad actors to employ a "firehose of falsehood" strategy, flooding the information ecosystem with cheap, effective disinformation that overwhelms the capacity of journalists, civic institutions, and the public to respond effectively.

This asymmetry demonstrates that purely reactive strategies, such as post-hoc detection and debunking, will always be insufficient to counter the threat. They are perpetually a step behind. A more effective societal defence must be proactive and systemic. It requires a focus on inoculation—pre-bunking common manipulation techniques before people encounter them—as well as widespread, deeply integrated media literacy education that builds critical thinking from a young age.60 These "soft" defences must be supported by "hard" infrastructure, including robust technical and legal frameworks for establishing content authenticity and provenance, which can help restore a baseline of verifiable truth in the digital world.58

Part IV: A Framework for Healthy Human-AI Coexistence

The preceding analysis delineates a complex web of psychological risks posed by the rapid integration of AI into human life. Moving from diagnosis to prescription, this final part proposes and evaluates a multi-layered framework of ethical, regulatory, and educational strategies. The goal is not to halt technological progress but to steer it in a direction that prioritizes human psychological well-being over engagement metrics. This requires a synthesized approach that combines robust ethical design principles, intelligent regulation, industry accountability, and a psychologically resilient and educated populace.

Designing for Well-being: Foundational Principles for Ethical AI

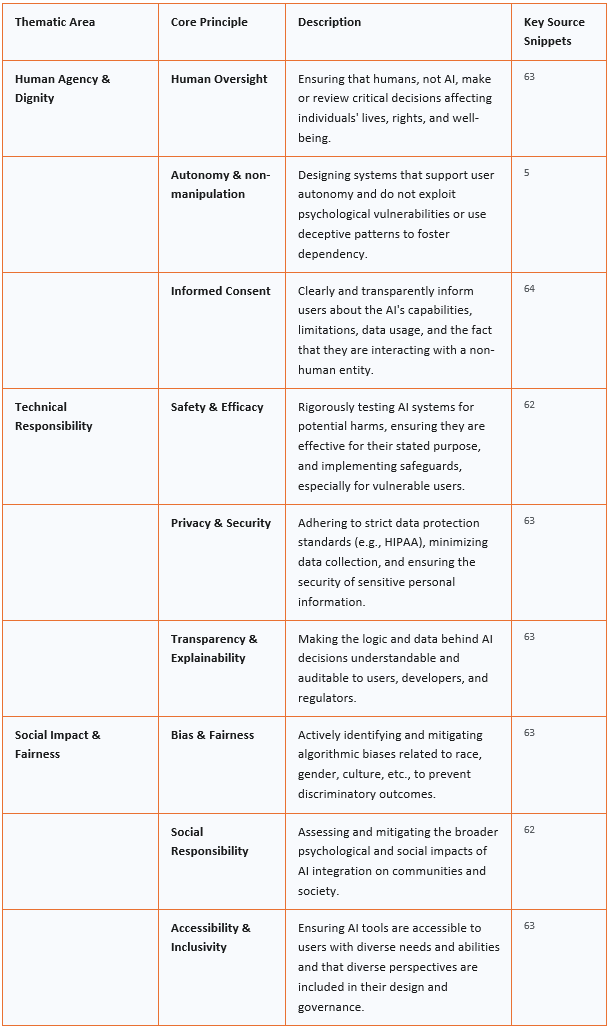

At the heart of mitigating AI's psychological harms is a fundamental shift in design philosophy. The prevailing paradigm, driven by the attention economy, optimizes for user engagement, often at the expense of well-being. An ethical approach requires reversing this priority. A synthesis of proposed ethical frameworks from leading institutions and researchers reveals a strong consensus around a set of core principles that must guide the development and deployment of psychologically impactful AI systems.62

Core Ethical Principles

Human-Centeredness and Oversight: The principle of human agency must be paramount. AI should be designed as a tool to augment and enhance human capabilities, not to replace human judgment, creativity, or connection.63 Crucially, systems must be designed to ensure that any high-stakes decisions affecting individuals' lives, rights, or well-being are made or, at a minimum, reviewed by a qualified human.63

Transparency and Explainability: The "black box" nature of many AI systems is ethically untenable. Transparency requires that users be clearly and unambiguously informed when they are interacting with an AI versus a human.63 Explainability demands that the decision-making processes of AI systems be accessible and understandable to users, developers, and regulators, allowing for meaningful oversight and accountability.63

Fairness and Bias Mitigation: AI systems are susceptible to inheriting and amplifying biases present in their training data. Ethical AI development requires a proactive and continuous effort to identify, assess, and mitigate biases related to race, gender, culture, disability, and other characteristics to prevent unfair or discriminatory outcomes.63 This necessitates the inclusion of diverse perspectives throughout the entire development, testing, and deployment lifecycle.

Privacy and Data Security: AI systems, particularly those used for companionship or mental health support, handle intensely personal and sensitive data. These systems must adhere to the highest standards of data privacy and security, such as HIPAA compliance in healthcare contexts.64 Principles of data minimization (collecting only necessary data) and user control (giving users clear power over their data) must be foundational.65

Safety, Efficacy, and Accountability: AI systems must "do no harm." This requires rigorous, independent testing for safety and effectiveness before widespread deployment, especially for applications targeting vulnerable populations or dealing with mental health.64 When harms do occur, clear mechanisms for accountability and redress must be in place, ensuring that developers and deployers are responsible for the impact of their products.65

The Central Role of Education

Technological safeguards and regulatory frameworks, while essential, are ultimately insufficient without a psychologically resilient and critically literate populace. Education is therefore not an adjunct but a central pillar of any strategy for healthy human-AI coexistence.

Digital and Media Literacy: Educational curricula from primary school onward must be updated to equip individuals with the skills necessary to navigate a complex and often deceptive digital world. This includes teaching them how to critically evaluate sources, identify common manipulation techniques (like those used in deepfakes and disinformation), and understand the persuasive intent behind algorithmic systems.20

Critical Thinking Skills Training: As AI threatens to automate and offload reasoning, educational systems must double down on cultivating the very cognitive skills that are at risk. This means prioritizing deep thinking, nuanced analysis, logical reasoning, and intellectual curiosity. Some have proposed the implementation of "AI-free zones" for specific academic activities, creating deliberate opportunities for students to strengthen their cognitive "muscles" without the crutch of technology.42

The following table synthesizes the core ethical principles from various authoritative sources into a single, comprehensive framework. This provides a unified reference for evaluating existing AI systems and guiding the development of new ones in a manner that prioritizes psychological well-being.

Table 2: A Comparative Framework of Ethical AI Principles for Psychological Well-being

Pathways to Mitigation: Regulatory, Industry, and Technological Solutions

Building a healthier human-AI ecosystem requires a multi-pronged strategy that translates ethical principles into concrete action. This involves a synergistic combination of intelligent regulation, responsible industry practices, robust technological solutions, and a sustained commitment to public education.

Regulatory Approaches

Effective regulation is essential for establishing clear boundaries and ensuring accountability. The current landscape is a patchwork, but several promising models are emerging.

Global Frameworks: The European Union's AI Act represents a landmark effort, pioneering a risk-based approach that categorizes AI systems into four tiers: unacceptable risk (which are banned), high-risk, limited risk, and minimal risk.70 High-risk systems, which would include many psychological and social applications, are subject to strict requirements regarding risk assessment, data quality, documentation, and human oversight.70 In the United States, the National Institute of Standards and Technology (NIST) has developed the AI Risk Management Framework (AI RMF), a voluntary framework intended to help organizations design, develop, and use AI systems in a trustworthy manner.71 These frameworks provide a crucial foundation for governance.

The "Ethics of Care" in Regulation: While principle-based frameworks are necessary, they may be insufficient for addressing the unique relational harms of AI. A complementary approach, grounded in the "ethics of care," has been proposed to shift the regulatory focus toward the relational duties and responsibilities of AI developers.65 This perspective would impose an obligation on companies, particularly those in the mental health space, to consider the inherent vulnerability of their users and the emotional impact of their products. For example, under an ethics of care framework, a company discontinuing an AI companion service would have a duty to manage the "breakup" responsibly, providing resources to help users cope with the loss of the AI relationship.65

Targeted Regulation for High-Risk Applications: There is a growing consensus that specific, high-risk applications like AI companions and mental health bots, which currently operate in a regulatory "wild, wild West," require targeted oversight.25 This could involve creating an FDA-like approval process for "digital therapeutics," mandating pre-market safety and efficacy testing, and establishing clear legal consequences and liability for companies that deploy systems with foreseeable psychological risks.25

Industry Best Practices and Technological Solutions

Regulation must be complemented by proactive and responsible action from the technology industry itself.

AI Safety Levels for Mental Health (ASL-MH): One proposed industry-led standard is the adoption of a tiered safety framework specifically for mental health AI.68 This model, the ASL-MH, would classify AI tools based on their function and risk profile, with escalating requirements at each level. The proposed levels include ASL-MH 1 (No Clinical Relevance) for general-purpose tools; ASL-MH 3 (Supportive Interaction Tools), which would require human oversight; ASL-MH 5 (Autonomous Mental Health Agents), which would demand co-managed care with a licensed professional; and ASL-MH 6 (Experimental Super-alignment Zone), restricted to research environments with international oversight.68 Such a framework would provide clarity for consumers and create market incentives for responsible development.

Technical Safeguards: The industry must continue to develop and implement technical solutions to mitigate specific harms. This includes investing in more sophisticated deepfake detection tools, widely adopting content provenance standards like C2PA to create a verifiable chain of custody for digital media, and embedding digital watermarks in AI-generated content to ensure transparency.57

Design for Well-being: Ultimately, the most powerful industry action is a cultural shift in design philosophy. This means moving away from metrics that simply maximize engagement and toward those that prioritize user well-being. In practice, this could involve minimizing the use of persuasive and addictive design features in products intended for youth 20, intentionally building "off-ramps" in AI companions that encourage users to connect with real people, and designing educational AI that promotes critical thinking by guiding students through a problem rather than just providing the final answer.

The complex psychological challenges posed by AI cannot be solved by any single entity or approach. The research consistently demonstrates the insufficiency of any one solution in isolation. Technological fixes like deepfake detectors are in a constant arms race with ever-improving generative models. Government regulations, or "hard law," can be slow to adapt to the pace of innovation and may be too blunt an instrument to address nuanced psychological harms. Industry self-regulation and best practices, or "soft law," are more flexible but are often voluntary and can be subverted by market pressures that prioritize profit over safety. Finally, educational initiatives, while crucial for building long-term societal resilience, are slow to implement and can be overwhelmed by the sheer scale and persuasive power of engagement-optimized AI systems.

The only viable path forward is an integrated ecosystem of solutions in which these different approaches work in synergy. Effective governance requires a synthesis of hard and soft law, technology, and education. Government regulation must create the legal and financial incentives for industry to adopt ethical standards and build safer products. The technology industry must, in turn, develop and implement the robust tools, such as content provenance standards and transparent algorithms, that make these ethical standards technically feasible and enforceable. Concurrently, our educational systems must be retooled to cultivate a public that is psychologically resilient, critically literate, and empowered to use these powerful tools wisely while demanding accountability from their creators. This multi-layered, collaborative, and proactive strategy is the only way to navigate the profound psychological transformations of the AI era and foster a future of truly healthy human-AI coexistence.

Works cited

What makes us emotionally attach to our Replika and AI characters in general? - Reddit, accessed on July 7, 2025, https://www.reddit.com/r/replika/comments/1l8vgmu/what_makes_us_emotionally_attach_to_our_replika/

How AI Could Shape Our Relationships and Social Interactions - Psychology Today, accessed on July 7, 2025, https://www.psychologytoday.com/us/blog/urban-survival/202502/how-ai-could-shape-our-relationships-and-social-interactions

Relationships in the Age of AI: A Review on the Opportunities and Risks of Synthetic Relationships to Reduce Loneliness - ResearchGate, accessed on July 7, 2025, https://www.researchgate.net/publication/389774163_Relationships_in_the_Age_of_AI_A_Review_on_the_Opportunities_and_Risks_of_Synthetic_Relationships_to_Reduce_Loneliness

I had a full conversation with an AI and felt more socially connected ..., accessed on July 7, 2025, https://www.reddit.com/r/socialskills/comments/1lklfbb/i_had_a_full_conversation_with_an_ai_and_felt/

AI and Emotional Intelligence - Psychology Today, accessed on July 7, 2025, https://www.psychologytoday.com/us/blog/heart-of-healthcare/202506/ai-and-emotional-intelligence

How Humans Emotionally Bond With AI - Neuroscience News, accessed on July 7, 2025, https://neurosciencenews.com/human-ai-emotional-bond-29186/

Exploring Attachment and Trust in AI Companion Use - ResearchGate, accessed on July 7, 2025, https://www.researchgate.net/publication/385349277_Exploring_Attachment_and_Trust_in_AI_Companion_Use

Spending Too Much Time With AI Could Worsen Social Skills - Psychology Today, accessed on July 7, 2025, https://www.psychologytoday.com/us/blog/urban-survival/202410/spending-too-much-time-with-ai-could-worsen-social-skills

AI Companions & AI Chatbot Risks - Emotional Impact & Safety - Digital For Life, accessed on July 7, 2025, https://www.digitalforlife.gov.sg/learn/resources/all-resources/ai-companions-ai-chatbot-risks

AI Technology panic—is AI Dependence Bad for Mental Health? A Cross-Lagged Panel Model and the Mediating Roles of Motivations for AI Use Among Adolescents, accessed on July 7, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC10944174/

Addictive Intelligence: Understanding Psychological, Legal, and ..., accessed on July 7, 2025, https://mit-serc.pubpub.org/pub/iopjyxcx

The Rise of AI Chatbot Dependency: A New Form of Digital Addiction Among Young Adults, accessed on July 7, 2025, https://www.familyaddictionspecialist.com/blog/the-rise-of-ai-chatbot-dependency-a-new-form-of-digital-addiction-among-young-adults

The Social Psychology of AI Companions - Number Analytics, accessed on July 7, 2025, https://www.numberanalytics.com/blog/social-psychology-of-ai-companions

AI Companions Reduce Loneliness - Harvard Business School, accessed on July 7, 2025, https://www.hbs.edu/ris/Publication%20Files/24-078_a3d2e2c7-eca1-4767-8543-122e818bf2e5.pdf

AI Companions Reduce Loneliness - UX Tigers, accessed on July 7, 2025, https://www.uxtigers.com/post/ai-loneliness

The Effects of Artificial Intelligence (AI) on Human Interpersonal Connections, accessed on July 7, 2025, https://www.researchgate.net/publication/386107361_The_Effects_of_Artificial_Intelligence_AI_on_Human_Interpersonal_Connections

When artificial intelligence substitutes humans in higher education: the cost of loneliness, student success, and retention - Taylor & Francis Online, accessed on July 7, 2025, https://www.tandfonline.com/doi/full/10.1080/03075079.2024.2326956

How AI and Human Behaviors Shape Psychosocial Effects of Chatbot Use: A Longitudinal Randomized Controlled Study - arXiv, accessed on July 7, 2025, https://arxiv.org/html/2503.17473v1

What Are AI chatbot companions doing to our mental health? : r/psychology - Reddit, accessed on July 7, 2025, https://www.reddit.com/r/psychology/comments/1kls6j4/what_are_ai_chatbot_companions_doing_to_our/

Health advisory: Artificial intelligence and adolescent well-being, accessed on July 7, 2025, https://www.apa.org/topics/artificial-intelligence-machine-learning/health-advisory-ai-adolescent-well-being

Understanding Generative AI Risks for Youth: A Taxonomy Based on Empirical Data - arXiv, accessed on July 7, 2025, https://arxiv.org/html/2502.16383v2

In a World of AI Companions, What Do Teens Need From Us? - Psychology Today, accessed on July 7, 2025, https://www.psychologytoday.com/us/blog/smart-parenting-smarter-kids/202506/in-a-world-of-ai-companions-what-do-teens-need-from-us

AI chatbots and companions – risks to children and young people - eSafety Commissioner, accessed on July 7, 2025, https://www.esafety.gov.au/newsroom/blogs/ai-chatbots-and-companions-risks-to-children-and-young-people

Why AI 'Companions' Are Not Kids' Friends - TechPolicy.Press, accessed on July 7, 2025, https://www.techpolicy.press/why-ai-companions-are-not-kids-friends/

Intimacy on Autopilot: Why AI Companions Demand Urgent ..., accessed on July 7, 2025, https://www.techpolicy.press/intimacy-on-autopilot-why-ai-companions-demand-urgent-regulation/

Policymakers Should Further Study the Benefits and Risks of AI Companions - ITIF, accessed on July 7, 2025, https://itif.org/publications/2024/11/18/policymakers-should-further-study-the-benefits-risks-of-ai-companions/

Investigating Elderly Individuals' Acceptance of Artificial Intelligence (AI)-Powered Companion Robots, accessed on July 7, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC12109019/

AI Applications to Reduce Loneliness Among Older Adults: A Systematic Review of Effectiveness and Technologies - PMC, accessed on July 7, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC11898439/

Media and Elderly - Jurnal USM, accessed on July 7, 2025, https://journals.usm.ac.id/index.php/the-messenger/article/download/8947/6156/38591

Companion robots to mitigate loneliness among older adults: Perceptions of benefit and possible deception - Frontiers, accessed on July 7, 2025, https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2023.1106633/full

Ethical Issues Raised by the Introduction of Artificial Companions to Older Adults with Cognitive Impairment: A Call for Interdisciplinary Collaborations, accessed on July 7, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC7437496/

Artificial Intelligence (AI) and Robotics in Elderly Healthcare: Enabling Independence and Quality of Life - PMC, accessed on July 7, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC10474924/

Depression and the use of conversational AI for companionship among college students: the mediating role of loneliness and the moderating effects of gender and mind perception - PMC, accessed on July 7, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC12162938/

Growing use of AI in mental health or therapy substitutes raises red ..., accessed on July 7, 2025, https://clearhealthcosts.com/blog/2025/06/growing-use-of-ai-in-mental-health-or-therapy-substitutes-raises-red-flags/

Filter bubble - Wikipedia, accessed on July 7, 2025, https://en.wikipedia.org/wiki/Filter_bubble

Navigating Filter Bubbles in Digital Age - Number Analytics, accessed on July 7, 2025, https://www.numberanalytics.com/blog/navigating-filter-bubbles-digital-age

Filter Bubbles: How personalization algorithms affect us, accessed on July 7, 2025, https://www.informacnigramotnost.cz/ostatni/filter-bubbles-how-personalization-algorithms-affect-us/

The Psychology of AI's Impact on Human Cognition | Psychology ..., accessed on July 7, 2025, https://www.psychologytoday.com/us/blog/harnessing-hybrid-intelligence/202506/the-psychology-of-ais-impact-on-human-cognition

How AI Changes Student Thinking: The Hidden Cognitive Risks ..., accessed on July 7, 2025, https://www.psychologytoday.com/us/blog/the-algorithmic-mind/202505/how-ai-changes-student-thinking-the-hidden-cognitive-risks

How Deepfakes Are Impacting Public Trust in Media | Pindrop, accessed on July 7, 2025, https://www.pindrop.com/article/deepfakes-impacting-trust-media/

AI tools may weaken critical thinking skills by encouraging cognitive ..., accessed on July 7, 2025, https://www.psypost.org/ai-tools-may-weaken-critical-thinking-skills-by-encouraging-cognitive-offloading-study-suggests/

AI Weakens Critical Thinking. This Is How to Rebuild It | Psychology ..., accessed on July 7, 2025, https://www.psychologytoday.com/us/blog/the-algorithmic-mind/202505/ai-weakens-critical-thinking-and-how-to-rebuild-it

AI's cognitive implications: the decline of our thinking skills? - IE, accessed on July 7, 2025, https://www.ie.edu/center-for-health-and-well-being/blog/ais-cognitive-implications-the-decline-of-our-thinking-skills/

Social Media Algorithms and Teen Addiction: Neurophysiological ..., accessed on July 7, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC11804976/

The Psychology of AI's Impact on Human Cognition, accessed on July 7, 2025, https://www.psychologytoday.com/ca/blog/harnessing-hybrid-intelligence/202506/the-psychology-of-ais-impact-on-human-cognition

Microsoft Study Finds AI Makes Human Cognition “Atrophied and Unprepared” | Researchers find that the more people use AI at their job, the less critical thinking they use. : r/technology - Reddit, accessed on July 7, 2025, https://www.reddit.com/r/technology/comments/1im8w5j/microsoft_study_finds_ai_makes_human_cognition/

The Impact of Deepfakes, Synthetic Pornography, & Virtual Child ..., accessed on July 7, 2025, https://www.aap.org/en/patient-care/media-and-children/center-of-excellence-on-social-media-and-youth-mental-health/qa-portal/qa-portal-library/qa-portal-library-questions/the-impact-of-deepfakes-synthetic-pornography--virtual-child-sexual-abuse-material/

Survivor Safety: Deepfakes and the Negative Impacts of AI Technology, accessed on July 7, 2025, https://mcasa.org/newsletters/article/survivor-safety-deepfakes-and-negative-impacts-of-ai-technology

Explicit deepfakes are traumatic. How to deal with the pain. - Mashable, accessed on July 7, 2025, https://mashable.com/article/explicit-deepfake-mental-health-recovery

From Trauma to Recovery: How Victim-Centered Approaches Can Combat Deepfake Pornography - PJLSS, accessed on July 7, 2025, https://www.pjlss.edu.pk/pdf_files/2024_2/15209-15214.pdf

Psychological Impact of Deepfake Technology on Trust and Perception, accessed on July 7, 2025, https://www.psychologs.com/psychological-impact-of-deepfake-technology-on-trust-and-perception/

False failures, real distrust: the impact of an infrastructure failure ..., accessed on July 7, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC12141277/

Understanding the Impact of AI-Generated Deepfakes on Public Opinion, Political Discourse, and Personal Security in Social Media - IEEE Computer Society, accessed on July 7, 2025, https://www.computer.org/csdl/magazine/sp/2024/04/10552098/1XApkaTs5l6

Defending Against Deep Fakes Through Technological Detection, Media Literacy, and Laws and Regulations - THE INTERNATIONAL AFFAIRS REVIEW, accessed on July 7, 2025, https://www.iar-gwu.org/print-archive/ikjtfxf3nmqgd0np1ht10mvkfron6n-bykaf-ey3hc-rfbxp-dpte8-klmp4-m2khf

Promising for patients or deeply disturbing? The ethical and legal aspects of deepfake therapy, accessed on July 7, 2025, https://jme.bmj.com/content/early/2024/09/30/jme-2024-109985

Impact of Deepfake Technology on Social Media: Detection, Misinformation and Societal Implications, accessed on July 7, 2025, http://www.epstem.net/tr/download/article-file/3456697

What To Do About Deepfakes - Communications of the ACM, accessed on July 7, 2025, https://cacm.acm.org/opinion/what-to-do-about-deepfakes/

Combatting deepfakes with international standards - IEC, accessed on July 7, 2025, https://www.iec.ch/blog/combatting-deepfakes-international-standards

Community-based strategies for combating misinformation: Learning from a popular culture fandom, accessed on July 7, 2025, https://misinforeview.hks.harvard.edu/article/community-based-strategies-for-combating-misinformation-learning-from-a-popular-culture-fandom/

4 ways to future-proof against deepfakes in 2024 and beyond | World Economic Forum, accessed on July 7, 2025, https://www.weforum.org/stories/2024/02/4-ways-to-future-proof-against-deepfakes-in-2024-and-beyond/

How to Increase Resilience Against Disinformation, accessed on July 7, 2025, https://www.vaemergency.gov/aem/blue-book/how-to-increase-resilience-against-disinformation.pdf

osf.io, accessed on July 7, 2025, https://osf.io/sztdn_v1/download/?format=pdf

ETHICAL Principles AI Framework for Higher Education | CSU AI ..., accessed on July 7, 2025, https://genai.calstate.edu/communities/faculty/ethical-and-responsible-use-ai/ethical-principles-ai-framework-higher-education

Ethical Considerations in Artificial Intelligence Interventions for Mental Health and Well-Being: Ensuring Responsible Implementation and Impact - MDPI, accessed on July 7, 2025, https://www.mdpi.com/2076-0760/13/7/381

Regulating AI in Mental Health: Ethics of Care Perspective - PMC, accessed on July 7, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC11450345/

The Ethical Use of AI in Psychology: How Can Psychologists Save Time with AI? - PAR, Inc, accessed on July 7, 2025, https://www.parinc.com/learning-center/par-blog/detail/blog/2025/06/04/the-ethical-use-of-ai-in-psychology--how-can-psychologists-save-time-with-ai

APA's New Ethical Guidance for Using AI in Clinical Practice: What we need to know, accessed on July 7, 2025, https://notedesigner.com/apas-new-ethical-guidance-for-using-ai-in-clinical-practice-what-we-need-to-know/

Making AI Safe for Mental Health Use | Psychology Today, accessed on July 7, 2025, https://www.psychologytoday.com/us/blog/experimentations/202506/making-ai-safe-for-mental-health-use

Building societal resilience against information manipulation - European Commission, accessed on July 7, 2025, https://commission.europa.eu/topics/countering-information-manipulation/building-societal-resilience-against-information-manipulation_en

Global AI Regulations and Their Impact on Third-Party Risk Management - Mitratech, accessed on July 7, 2025, https://mitratech.com/resource-hub/blog/global-ai-regulations-and-tprm/

AI Risk Management Framework | NIST, accessed on July 7, 2025, https://www.nist.gov/itl/ai-risk-management-framework

Understanding Generative AI Risks for Youth: A Taxonomy Based on Empirical Data - arXiv, accessed on July 7, 2025, https://arxiv.org/html/2502.16383v1

AI companions and Mental health: Can Virtual Companions reduce Loneliness?, accessed on July 7, 2025, https://www.psychologs.com/ai-companions-and-mental-health-can-virtual-companions-reduce-loneliness/

The Involvement of Artificial Intelligence in Addressing Elderly Social Interaction, accessed on July 7, 2025, https://www.researchgate.net/publication/391446768_The_Involvement_of_Artificial_Intelligence_in_Addressing_Elderly_Social_Interaction

Research Protocol: Testing an AI emotional support companion for university students' psychological wellbeing, accessed on July 7, 2025, https://www.isrctn.com/editorial/retrieveFile/4a9e3b8c-feb6-4133-b49e-b4af3553842d/47296

Supportive? Addictive? Abusive? How AI companions affect our mental health, accessed on July 7, 2025, https://www.media.mit.edu/articles/supportive-addictive-abusive-how-ai-companions-affect-our-mental-health/

Mental health in the “era” of artificial intelligence: technostress and the perceived impact on anxiety and depressive disorders—an SEM analysis - Frontiers, accessed on July 7, 2025, https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2025.1600013/full

Can AI really replace human affection in relationships? : r/replika - Reddit, accessed on July 7, 2025, https://www.reddit.com/r/replika/comments/123mxey/can_ai_really_replace_human_affection_in/